Finding the Project Manager Capacity Sweet Spot

Discover how effective capacity planning and project velocity management help project managers balance multiple projects without burnout, ensuring...

Master the Metal Toad project life cycle with our detailed guide on effective project management techniques for successful project completion.

We're in the middle of interviews for our open project manager position and we've been talking to a lot of great candidates, all with diverse project process backgrounds at various companies. Not to toot our own horn too much, but one thing that I've realized as a result is just how great our project life cycle model is! We've made great strides in the last several years, and at this point we have it dialed in for the type of projects that come through our door.

Without further ado, in the spirit of open-sourcing not only our software contributions but also our processes, here's what we do and why it works:

When a sales lead is vetted, our strategy team wrangles as many details from the potential client as possible, and also suggests a big range of new ideas to consider. This helps us figure out what on earth it is they want us to do and what problems technology can actually solve for them. Sometimes it's crystal-clear documentation, feature lists, and designs or wireframes. Other times it's a five-item bullet list scribbled on a napkin. Regardless, when our sales team says it's time to estimate, we jump to it!

Our production team tries to reduce the sales workload on developers as much as possible by turning the sales info into a line-item spreadsheet with relevant details to hand off to a developer. This leaves our developers to focus on the big unknowns and to research specific solutions, instead of counting templates or re-estimating things like server setup that happen on every project. To help with accuracy, we even have an à la carte menu of regularly estimated items that we can accurately predict, especially for Drupal 7 projects. Often an estimate spreadsheet makes it to our estimating developers needing only some thinking and API review or some test code.

Completed estimates make their way back to the sales team, along with any caveats, risks, and unknowns. Our developers almost always go with ranged low-high estimates, with low being the absolute best-case estimate and high being what happens if everything blows up along the way. As a result, it's on the sales team to understand the overall risk of the project and determine which estimates to go with to close the sale. We send our proposals to clients with the detailed estimate spreadsheet so they can see the in-depth look we take at their project even before we've sold the work. We also provide a couple of important caveats to clients:

We often come in at the higher end of competitive bids for two reasons: 1) We provide a superior level of customer service and project management, and 2) we take the time to estimate down to minute details on a project and capture everything we possibly can in the initial estimate. We've lost more than one sale following an initial presentation and estimate only to have a client come back to us for help to save their project that went off the rails with a "cheaper" shop.
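As a rough illustration of the low-high ranged estimates described above, here is a minimal Python sketch. It is not Metal Toad's actual spreadsheet or tooling: the `LineItem` fields, the sample numbers, and the `risk` knob used to pick a figure between the two totals are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    name: str
    low_hours: float   # absolute best case
    high_hours: float  # everything blows up along the way


def estimate_range(items):
    """Return the (low, high) totals across all line items."""
    low = sum(item.low_hours for item in items)
    high = sum(item.high_hours for item in items)
    return low, high


def quoted_hours(items, risk):
    """Pick a figure between the low and high totals.

    `risk` is a hypothetical 0.0-1.0 judgment call by the sales team:
    0.0 quotes the best case, 1.0 quotes the worst case.
    """
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0.0 and 1.0")
    low, high = estimate_range(items)
    return low + risk * (high - low)


# Made-up line items for illustration only.
items = [
    LineItem("Server setup", 4, 6),
    LineItem("Custom API integration", 20, 60),
    LineItem("Page templates", 16, 24),
]

low, high = estimate_range(items)
print(f"Range: {low}-{high} hours")
print(f"Quote at medium risk: {quoted_hours(items, 0.5)} hours")
```

Notice how one uncertain item (the API integration here) accounts for most of the spread, which is why it pays to keep the predictable items off the developers' plates.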

We closed a sale. Woo hoo! Now comes more fun stuff. Usually things kick off with some discovery, wireframes, designs, and technical architecture planning. We like to make sure we're involved in the design process when possible, because while designers love to shoot for the moon, they're not always particularly scope-conscious or may not understand the complexities of development required for their designs. We can usually suggest simple design changes to save clients buckets of money (or in some cases, just rein things in to match the scope) without compromising the overall design aesthetic.

While designers are designing, we're spec writing. We produce a very robust tech spec that essentially says a) what we're going to build, b) how it's going to function, and c) the basics of how we're going to build it. This can't be completed until designs are final, because the designs inform it to a great degree. We don't touch development beyond perhaps a base site install until we have client approval on the tech spec; it's always cheaper to redo text in a Word document than it is to redo code.

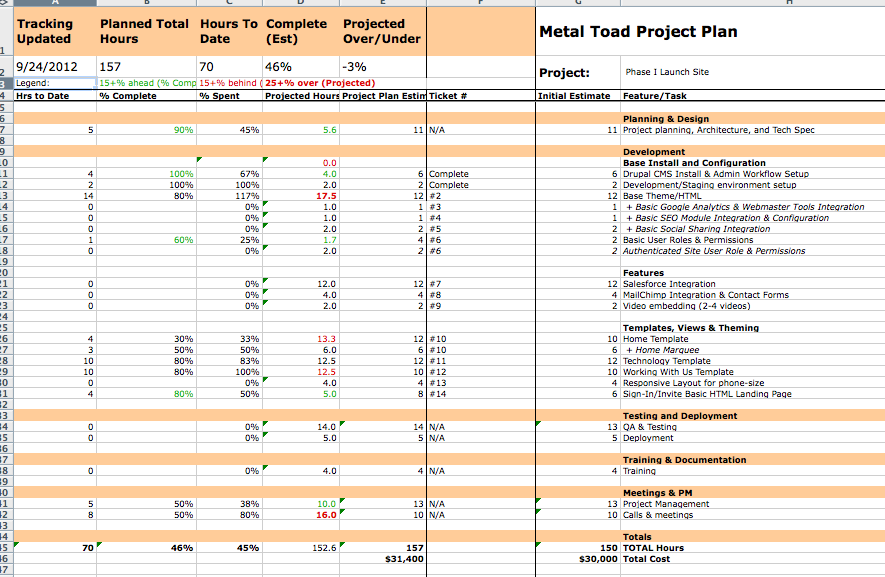

Then comes a critical step - the project plan! Without this document, we're powerless. It's the single most enabling document a Metal Toad project manager has in their arsenal. The PM works with the developer(s) assigned to the project to re-estimate the entire project now knowing all the details, which is often much more than what was known when we sold the project. The new estimates help us confirm several things:

Once the project plan is reconciled, we're good to go. Project managers create tickets relating to each specific task for development, tie those tickets to the project plan estimates, and track numbers like hawks throughout the course of development to project the potential over/under on specific tasks and on the project as a whole.
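To make the over/under tracking concrete, here is a small Python sketch. It assumes each ticket carries its project plan estimate, the hours logged so far, and a current guess at the hours remaining; those field names and the sample tickets are hypothetical, not taken from any particular ticketing system.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    task: str
    estimated_hours: float  # from the project plan
    logged_hours: float     # time tracked so far
    remaining_hours: float  # developer's current guess at what's left

    @property
    def projected_hours(self):
        """Where this ticket looks like it will land."""
        return self.logged_hours + self.remaining_hours

    @property
    def variance(self):
        """Positive means over the estimate, negative means under."""
        return self.projected_hours - self.estimated_hours


def project_variance(tickets):
    """Projected over/under for the project as a whole."""
    return sum(ticket.variance for ticket in tickets)


# Made-up tickets for illustration only.
tickets = [
    Ticket("Base site install", 8, 6, 0),
    Ticket("Events calendar", 24, 20, 10),
    Ticket("Search", 16, 0, 16),
]

for ticket in tickets:
    print(f"{ticket.task}: {ticket.variance:+} hours")
print(f"Project: {project_variance(tickets):+} hours")
```

Tracking the variance per ticket as well as in total shows whether one task is eating the savings from another before the whole project tips over.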

Then we do the actual development. There's some voodoo magic that happens in our developer pit and a website is born. Bam! Easy. We turn around projects fast. To give you a reference point, we are somewhere between a snake and a mongoose… and a panther.

"Ewwww… quality assurance is kind of boring and tedious!" WRONG. It's incredibly boring and tedious. But it's crucial. We've improved a lot in this arena process-wise, and there's always room to improve our QA process further. We have a thorough process and testing plan that results in three rounds of multi-dimensional QA.

The test plan dimensions are:

The QA Rounds are:

Considering that QA can be never-ending and there is always another bug to be found if you look hard enough, we don't cross-test every test plan dimension over every round of QA. Instead, our goal is to get through all pages, content, and images in all browsers by the time we get through all rounds of QA. If we catch regressions along the way, we go back and retest for those specific regressions across browsers/devices. Just like our development, QA is all about progressive improvement. Once a dropdown menu works in one browser we'll test it in the rest, but it'll never be an efficient use of time to confirm something is broken in 18 different browsers.
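The idea of covering everything by the end of all rounds, rather than in every round, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: it tracks just two dimensions (pages and browsers), and the page names, browser names, and round contents are invented.

```python
from itertools import product

# Hypothetical test plan dimensions.
pages = ["home", "about", "contact"]
browsers = ["chrome", "firefox", "safari"]

# What each QA round actually covered, as (page, browser) pairs.
rounds = {
    1: {("home", "chrome"), ("about", "chrome"), ("contact", "chrome")},
    2: {("home", "firefox"), ("about", "firefox"),
        ("contact", "firefox"), ("home", "safari")},
    3: {("about", "safari")},
}


def untested(pages, browsers, rounds):
    """Return the page/browser pairs that no round has covered yet."""
    required = set(product(pages, browsers))
    covered = set().union(*rounds.values())
    return sorted(required - covered)


print(untested(pages, browsers, rounds))
```

No single round tests every combination here, but the union of all three gets close, and the leftover list shows what still needs a pass before launch.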

It's time to launch. Deploy! Deploy! Deploy! We push the big red launch button, and either the site is live or things have blown up. No sweat!

Well, we got a site launched, so it can't have been THAT bad. Regardless, we schedule a retrospective for every project so that hopefully we can learn something from the project. Common retrospective discussion surrounds:

Once the project is complete, we also grade ourselves (both project managers and developers) individually across a variety of metrics including client satisfaction, budget, scope & timeline management, responsiveness to clients and effective communication, project quality, code quality, estimation, and more. We self-grade on those metrics and then other internal project stakeholders also grade us. The idea is that the two grades should roughly align, and if they don't, we discuss where perceptions differ and why.
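Here is one way the self-grade versus stakeholder-grade comparison could be sketched in Python. The 1-5 scale, the one-point threshold, and the sample grades are assumptions for the sake of the example; the source does not say how grades are scored or how far apart they need to be before a conversation happens.

```python
from statistics import mean


def misaligned(self_grades, stakeholder_grades, threshold=1.0):
    """Return metrics where the self-grade and the average stakeholder
    grade differ by more than `threshold`, mapped to the gap
    (positive means the self-grade was higher)."""
    flagged = {}
    for metric, own in self_grades.items():
        others = stakeholder_grades.get(metric)
        if not others:
            continue  # nobody else graded this metric
        gap = own - mean(others)
        if abs(gap) > threshold:
            flagged[metric] = gap
    return flagged


# Made-up grades on a hypothetical 1-5 scale.
self_grades = {"estimation": 4, "code quality": 5, "communication": 3}
stakeholder_grades = {
    "estimation": [4, 3],
    "code quality": [3, 3, 4],
    "communication": [4, 3],
}

for metric, gap in misaligned(self_grades, stakeholder_grades).items():
    print(f"Discuss {metric}: self-grade differs by {gap:+.1f}")
```

The flagged metrics are conversation starters, not verdicts: a gap in either direction just means perceptions differ and it's worth finding out why.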

This all comes together to form a complete picture of where we can improve on both a personal and company level. It also results in kudos to the team for a job well done and areas where individuals excelled. Then it's on to the next project, which should flow even more seamlessly than the last, because we're always trying to get better!

So there you go. Nothing totally revolutionary, but the subtle details and a great deal of team experience are the crucial parts. 60 percent of the time it works every time. Your mileage may vary, especially if you don't add our Metal Toad secret sauce to the equation. Now go give it a try!
