OpenAI has officially released GPT‑5, marking a shift in strategy by offering not just a single model but a tiered lineup: Standard, Mini, and Nano. According to TechCrunch, GPT‑5 represents the first “unified” model in OpenAI’s history, combining the reasoning capabilities of its larger models with the speed and responsiveness of its lighter-weight offerings.

This release aims to provide more flexibility to developers and businesses with distinct performance and cost requirements.

Tiered Models for Different Needs

Each of the three model sizes targets a different use case:

- Nano is optimized for speed and lightweight deployment, ideal for mobile apps or edge devices.
- Mini offers a middle ground, balancing inference speed with more robust reasoning.
- Standard delivers the most advanced capabilities, including deeper reasoning and complex task execution.

All models share the same core multi-modal foundation, supporting both text and image inputs natively. This enables broader application possibilities across domains like visual QA, document analysis, and code generation.

Expanded 400K Context Window

One of the most practical updates is the increase in context length, from 128,000 tokens to 400,000 tokens across all three models. This doesn’t match the largest context windows offered by some competitors, and GPT‑5 itself reportedly supports over 1 million tokens internally, but it provides a usable middle ground that accommodates long documents, multi-step instructions, and extended user sessions without requiring extreme compute resources.
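To get a feel for what 400,000 tokens means in practice, here is a rough pre-flight check for whether a document is likely to fit. This is only a sketch: the four-characters-per-token ratio is a crude assumption for English text, the 8,000-token output reserve is an arbitrary placeholder, and the function names are my own. A real tokenizer would give exact counts.

```python
# Rough pre-flight check: is a prompt likely to fit in a 400K-token window?
CONTEXT_WINDOW_TOKENS = 400_000  # figure quoted in this article
CHARS_PER_TOKEN = 4              # assumption: crude average for English text


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (heuristic only)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 8_000) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    document = "word " * 300_000  # about 1.5 million characters
    print(estimate_tokens(document), fits_in_context(document))
```

By this estimate, a document of around 1.5 million characters still squeezes in, which is why the larger window matters for long reports and extended sessions.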

Competitive Pricing, Especially for Nano

OpenAI appears to be positioning its new lineup competitively on pricing. The Nano variant, in particular, is designed to be both fast and cost-effective, likely aimed at high-volume, latency-sensitive applications.

As TechCrunch notes, “Mini (for low cost)” and “Nano (high speed)” allow developers to choose the right tool for the job based on cost and performance constraints—something that has become increasingly important as inference costs play a larger role in GenAI deployment decisions.

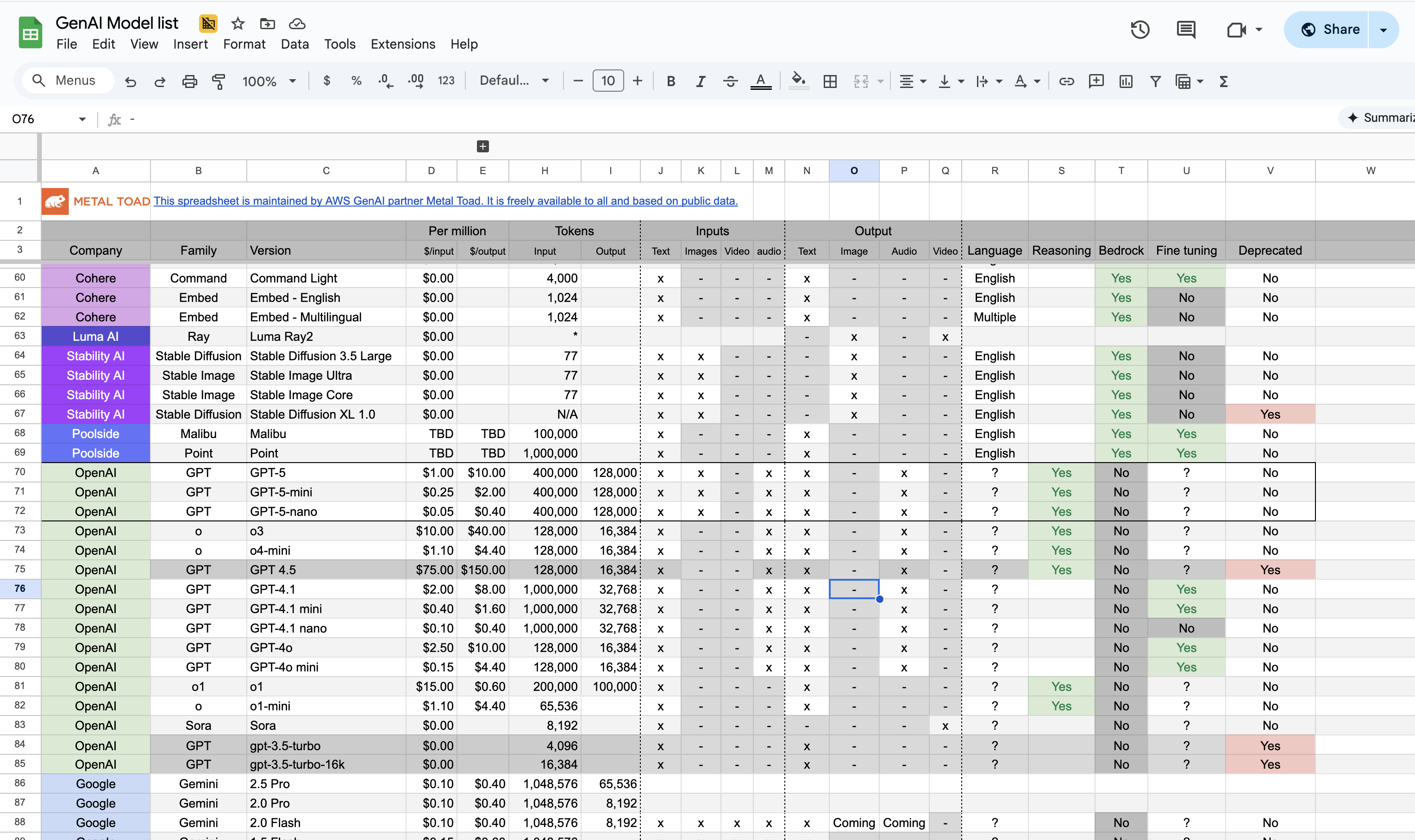

I've added the pricing to my GenAI Model list spreadsheet.

Multi-Modal Capabilities Now Standard

All three GPT‑5 variants support multi-modal input, enabling the use of images alongside text in prompts. This unified architecture simplifies development for teams working on applications involving visual content, and aligns with broader industry trends toward multi-modal generalist models.
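As an illustration of what mixing text and an image in one prompt can look like, the sketch below builds a request body in the Chat Completions style of message content, with a text part and an inline base64 image part. Nothing is sent over the network. The helper name `build_image_prompt` is hypothetical, and the default model identifier `gpt-5-mini` is an assumption on my part, so check the official API reference before relying on either.

```python
import base64
import json


def build_image_prompt(question: str, image_bytes: bytes,
                       model: str = "gpt-5-mini") -> dict:
    """Build a request body pairing a text question with an inline PNG image.

    The model name is an assumed identifier, not confirmed by the article.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_image_prompt("What does this chart show?", b"fake-png-bytes")
    print(json.dumps(body, indent=2))
```

Because the same payload shape would apply to any of the three tiers, switching models should only mean changing the `model` string.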

Reasoning vs. Speed Trade-Off

The standard trade-offs between model size and performance remain. Smaller models offer faster response times and lower operational costs, while larger models demonstrate better reasoning, memory, and adaptability to complex tasks. OpenAI’s tiered approach makes these trade-offs more transparent, helping users match the model to their use case more intentionally.
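One way to make that trade-off explicit in an application is a small routing rule that maps requirements to a tier. The sketch below is purely illustrative: the `Requirements` fields, the `pick_tier` function, and the decision rule are my own assumptions, and the returned strings are tier labels from this article rather than official model identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    latency_sensitive: bool     # e.g. mobile or edge deployment
    needs_deep_reasoning: bool  # e.g. complex, multi-step tasks


def pick_tier(req: Requirements) -> str:
    """Map requirements to one of the three tiers (illustrative rule only)."""
    if req.needs_deep_reasoning:
        # Mini is the middle ground when reasoning and speed both matter.
        return "mini" if req.latency_sensitive else "standard"
    # Without heavy reasoning needs, default to the fastest, lightest tier.
    return "nano"


if __name__ == "__main__":
    print(pick_tier(Requirements(latency_sensitive=True, needs_deep_reasoning=False)))
    print(pick_tier(Requirements(latency_sensitive=False, needs_deep_reasoning=True)))
```

In a real deployment the rule would probably also weigh cost per token and measured quality on your own tasks, but even a simple function like this forces the choice to be deliberate.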

TechCrunch emphasizes that GPT‑5 allows ChatGPT to now complete “a wide variety of tasks on behalf of users—such as generating software applications, navigating a user’s calendar, or creating research briefs.” The underlying architecture enables more seamless tool use and real-time context handling, depending on the model selected.