When we in the Drupal community talk about scalability, it's most often in terms of handling high numbers of visitors. An equally important dimension, and one we often overlook to our detriment, is scaling with larger datasets.

One of the biggest problems I see is a pattern of loading all of a module's data at once, regardless of size. Two examples:

Drupal core has a built-in assumption that menus and vocabularies are small. For most projects it's a valid assumption, but if you push the limits on these components the results can be disastrous.

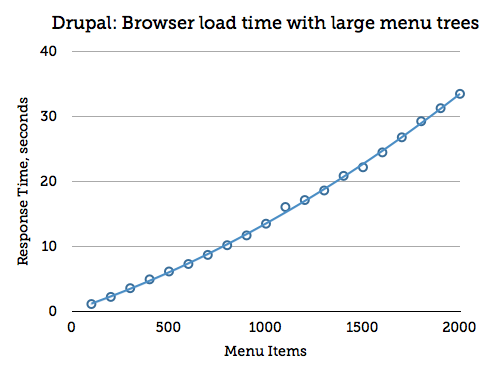

This one is particularly bad. The time needed to load /admin/structure/menu/manage/%menu grows as Θ(N²) in the number of menu items, because of the way Drupal's tabledrag system works. In practice, the limit is around 500 menu items.
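To get a feel for what quadratic growth means in practice, the sketch below assumes page load time is proportional to N² and expresses cost relative to the roughly 500-item practical limit mentioned above. The constant factor is unknown, so only the ratios are meaningful; `relative_cost` is a hypothetical helper for illustration, not part of Drupal.

```python
def relative_cost(n_items: int, baseline: int = 500) -> float:
    """Cost of an N-item page relative to a baseline, assuming time grows as N**2."""
    return (n_items / baseline) ** 2


for n in (500, 1_000, 2_000, 5_000):
    # Only the ratio is meaningful; the absolute time per item is not modelled.
    print(f"{n:>5} items: {relative_cost(n):6.1f}x the 500-item load time")
```

Under this assumption, doubling a menu from 500 to 1,000 items quadruples the load time (4.0x), and growing it tenfold to 5,000 items makes it a hundred times slower (100.0x).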

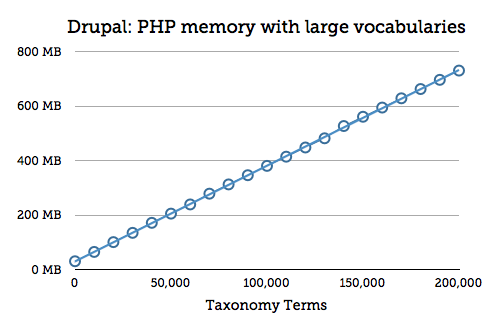

Similarly, memory consumption on /admin/structure/taxonomy/tags grows linearly with vocabulary size. The page load time is impacted less, because terms are listed with pagination. In my experience, performance problems start to crop up beyond about 20,000 terms.
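The difference between loading everything and loading one page can be illustrated outside Drupal. The sketch below uses Python's built-in `sqlite3` module and a hypothetical `terms` table (not Drupal's actual schema): `load_all_terms` materializes every row, so memory grows linearly with the vocabulary, while `load_term_page` fetches a single page with `LIMIT`/`OFFSET`, so the rows it holds in memory are bounded by the page size.

```python
import sqlite3


def make_vocabulary(conn: sqlite3.Connection, size: int) -> None:
    """Populate a hypothetical `terms` table with `size` synthetic terms."""
    conn.execute("CREATE TABLE terms (tid INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO terms (tid, name) VALUES (?, ?)",
        ((i, f"term {i}") for i in range(1, size + 1)),
    )


def load_all_terms(conn: sqlite3.Connection) -> list[tuple[int, str]]:
    """Anti-pattern: every row ends up in memory, so usage grows with vocabulary size."""
    return conn.execute("SELECT tid, name FROM terms ORDER BY tid").fetchall()


def load_term_page(conn: sqlite3.Connection, page: int, per_page: int = 50) -> list[tuple[int, str]]:
    """Fetch one zero-indexed page; rows held in memory are bounded by `per_page`."""
    return conn.execute(
        "SELECT tid, name FROM terms ORDER BY tid LIMIT ? OFFSET ?",
        (per_page, page * per_page),
    ).fetchall()


conn = sqlite3.connect(":memory:")
make_vocabulary(conn, 20_000)
print(len(load_all_terms(conn)))      # 20000 rows held in memory at once
print(len(load_term_page(conn, 2)))   # 50 rows: tids 101..150 only
```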

Are these limits reasonable? For perspective, here are a few published ontologies:

| Ontology | Size (items) |
|---|---|
| Library of Congress Subject Headings (LCSH) | 250,000 |
| Species in NCBI taxonomy | 357,590 |
| Medical Subject Headings (MeSH) | 177,000 |

Solutions

Unfortunately, there's not much the typical site builder can do about these problems. The best advice I can offer is to plan your project carefully and, if you anticipate any “big” datasets, to do some benchmarking before committing fully.
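One low-effort way to do that benchmarking is to time the same operation at several dataset sizes and estimate the growth exponent from a log-log fit: a slope near 1 suggests linear growth, near 2 quadratic. The sketch below is generic Python, not a Drupal tool; `build_dataset` and `operation` are hypothetical stand-ins for whatever generates your test content and exercises the page or API you care about.

```python
import math
import time
from typing import Callable, Sequence


def estimate_exponent(sizes: Sequence[int], timings: Sequence[float]) -> float:
    """Least-squares slope of log(time) against log(size); ~1 is linear, ~2 quadratic."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in timings]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def benchmark(build_dataset: Callable[[int], object],
              operation: Callable[[object], object],
              sizes: Sequence[int]) -> list[float]:
    """Time `operation` on a freshly built dataset of each size; setup is not timed."""
    timings = []
    for n in sizes:
        data = build_dataset(n)          # hypothetical: create n menu items, terms, etc.
        start = time.perf_counter()
        operation(data)                  # hypothetical: render the page or call the API
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    sizes = [250, 500, 1000, 2000]
    # Toy quadratic operation: a nested loop over every pair of items.
    timings = benchmark(lambda n: list(range(n)),
                        lambda items: sum(1 for a in items for b in items),
                        sizes)
    print(f"estimated exponent: {estimate_exponent(sizes, timings):.2f}")
```

Wall-clock timings are noisy, so the estimate is approximate; an exponent that climbs well above 1 as sizes grow is a signal worth investigating before launch.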

For module authors, I urge you to think ahead when designing your code. Try to think in extremes. How will your program behave if installed on a site with a million nodes or users? Or 1,000 fields?

- Use pagination where appropriate.

- Don't write API functions that load more data than needed.

- Avoid scenarios where datasets are multiplied together. An example is field formatters; use formatter settings instead of creating individual formatters for all possible option combinations.

- Use AJAX to lazy-load data.

- Benchmark your code with more “stuff” than you think is reasonable. Document the scalability limits where you find them.
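The point about datasets being multiplied together is easiest to see with a little arithmetic. Suppose, hypothetically, a formatter has three independent options with 3, 4 and 5 possible values. Defining one formatter per combination yields their product, while a single formatter with settings needs one definition with one setting per option. The sketch below uses Python's `itertools.product` to count both; the option names and values are invented for illustration and are not Drupal's actual formatter settings.

```python
from itertools import product
from math import prod

# Hypothetical display options for a single field formatter.
OPTIONS = {
    "image_style": ["thumbnail", "medium", "large"],
    "link_to": ["nothing", "content", "file", "original"],
    "caption": ["none", "title", "alt", "both", "custom"],
}

# Anti-pattern: one formatter definition per combination of option values.
per_combination = [dict(zip(OPTIONS, combo)) for combo in product(*OPTIONS.values())]

# Preferred: one formatter whose settings hold one value per option (defaults shown).
single_formatter = {"settings": {name: values[0] for name, values in OPTIONS.items()}}

print(len(per_combination))                    # 60 formatters
print(prod(len(v) for v in OPTIONS.values()))  # 60, the product of 3 * 4 * 5
print(len(single_formatter["settings"]))       # 3 settings on 1 formatter
```

Adding a fourth option with 4 values would push the per-combination approach to 240 formatters, while the settings-based formatter simply gains one more setting.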